Streamline, Optimize, and Succeed: Solving modern and complex workflows with AI/LLM

Bloomberg, October 21, 2024: Microsoft Launches AI Agents, Deepening Rivalry With Salesforce; “The Redmond, Washington-based software maker said Monday it would roll out 10 “autonomous agents” to complete tasks on behalf of people in areas such as sales, customer support and accounting”

Bloomberg, October 24, 2024: AI Agents Have Officially Entered the Workplace, Flaws and All; “Businesses are embracing AI agents that ‘don’t eat’ and can work 24/7”

CNBC, October 22, 2024: Amazon-backed Anthropic debuts AI agents that can do complex tasks, racing against OpenAI, Microsoft and Google.

Salesforce, September 12, 2024: Salesforce Unveils Agentforce — What AI Was Meant to Be.

AI chatbots use generative AI to provide responses based on a single interaction. A person makes a query and the chatbot uses natural language processing to reply.

The next frontier of artificial intelligence is agentic AI and Agentic RAG, which use sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems. Both are set to enhance productivity and operations across industries.

Here’s a breakdown of their differences:

Source: Markovate

Agentic AI

Agentic AI refers to systems capable of performing tasks on their own, making decisions, taking actions, and adapting to new information or changing situations without needing direct human input. These systems are designed for autonomous decision-making and can handle tasks across different domains. For example, tools like AutoGPT (an open-source agent built on OpenAI's GPT models) or ChatGPT with plug-ins can independently search for information, plan tasks, or execute actions, showcasing their ability to function with minimal supervision.

Applications:

Personal assistants (scheduling, emailing, etc.)

Autonomous operations in finance, healthcare, or logistics

Game-playing AIs like AlphaGo

Agentic RAG (Retrieval-Augmented Generation)

Agentic RAG (Retrieval-Augmented Generation) combines large language models (LLMs) with external knowledge retrieval to handle tasks that require real-time or external data. This allows the AI to autonomously retrieve, analyze, and synthesize information, generating accurate and contextually relevant answers. Its core functionality lies in blending text generation with information retrieval, making it highly effective for knowledge-intensive tasks. For example, an LLM-powered assistant using Agentic RAG can retrieve company documents to answer customer support queries or create detailed reports, showcasing its precision and utility in data-driven workflows.

Applications:

Legal document analysis

Enterprise knowledge management (search, Q&A, etc.)

Research and analytics
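What separates this from plain RAG is that the system inspects what it retrieved and decides what to do next. The sketch below is a hypothetical illustration, not any specific framework's API: a keyword lookup stands in for vector search, and a fixed rewrite table (`REWRITES`) stands in for the LLM that would normally reformulate a query that found nothing.

```python
from dataclasses import dataclass, field

# Hypothetical company knowledge base: document title -> content.
KNOWLEDGE_BASE = {
    "refund policy": "Refunds are issued within 5 business days.",
    "shipping times": "Standard shipping takes 3-7 days.",
}

# Hypothetical rewrite table standing in for an LLM that reformulates queries.
REWRITES = {"money back": "refund policy", "delivery": "shipping times"}


def search(query: str) -> list[str]:
    """Naive keyword retrieval: return documents whose title appears in the query."""
    q = query.lower()
    return [text for title, text in KNOWLEDGE_BASE.items() if title in q]


def rewrite(query: str) -> str:
    """Stand-in for an LLM call that rephrases a query that found nothing."""
    q = query.lower()
    for phrase, replacement in REWRITES.items():
        q = q.replace(phrase, replacement)
    return q


@dataclass
class AgentTrace:
    steps: list[str] = field(default_factory=list)


def agentic_answer(question: str, max_steps: int = 3) -> tuple[str, AgentTrace]:
    """Retrieve, check whether anything useful came back, and retry with a
    rewritten query instead of answering from an empty result."""
    trace = AgentTrace()
    query = question
    for _ in range(max_steps):
        hits = search(query)
        trace.steps.append(f"search({query!r}) -> {len(hits)} hit(s)")
        if hits:
            # A real agent would have the LLM synthesize an answer from the hits.
            return hits[0], trace
        new_query = rewrite(query)
        if new_query == query.lower():
            break  # the rewriter has nothing more to offer
        query = new_query
    return "I could not find this in the company documents.", trace
```

Asked "How do I get my money back?", the first search finds nothing, the agent rewrites the query to mention the refund policy, and the second search succeeds. A single-shot pipeline would have stopped after the first miss.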

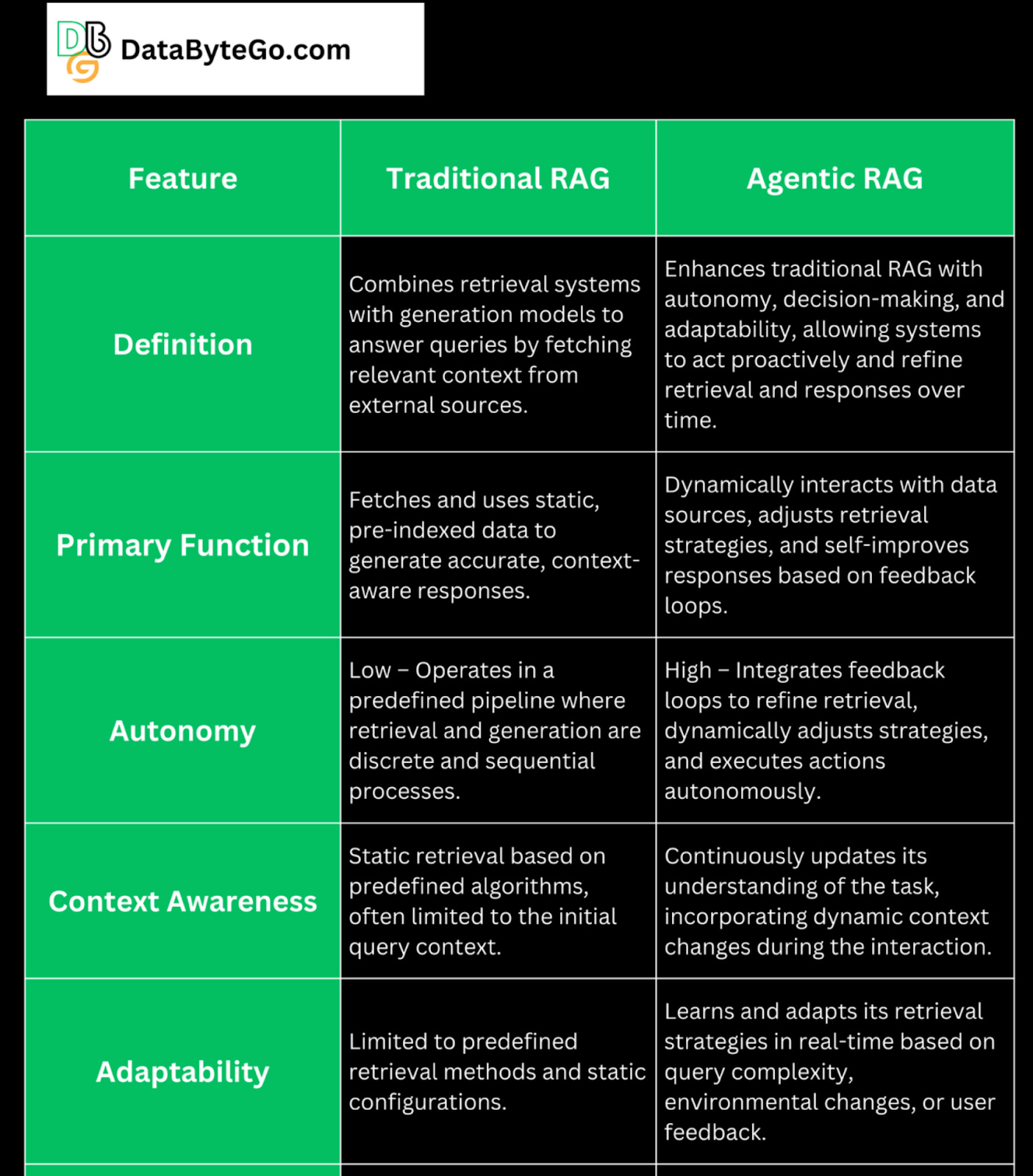

The Evolution of RAG: From Traditional to Agentic

Retrieval-Augmented Generation (RAG) is the process of optimizing the output of a Large Language Model so that it references an external knowledge source (documents, PDFs, etc.) or knowledge base outside its training data before generating a response.

The launch of ChatGPT in November 2022 was a significant turning point for RAG. Since then, RAG has been widely used to combine external documentation or knowledge sources with the reasoning capabilities of large language models (LLMs).

What is Retrieval Augmented Generation (RAG)?

RAG, or Retrieval-Augmented Generation, is a framework that combines two main approaches in natural language processing: retrieval and generation.

It involves retrieving relevant information from a large dataset (unstructured or semi-structured data) and then using that information to enhance the generation of text-based outputs, such as summaries or answers to questions.

This combination aims to improve the quality and relevance of generated text by providing additional context from retrieved sources.
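To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a tiny in-memory document list and uses word-count cosine similarity as a stand-in for the embedding model and vector database a real system would use. `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical names, and the LLM call itself is left out.

```python
import math
import re
from collections import Counter

# Toy in-memory "knowledge base"; a real system would use embeddings and a vector database.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Our support team is available Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated account manager.",
]


def tokenize(text: str) -> Counter:
    """Lower-case bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Augmentation step: put retrieved context in front of the user's question.
    The generation step would send this prompt to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

Calling `build_prompt("How long do refunds take?")` ranks the refund document first and places it in the context section, which is the augmented input the LLM would then answer from.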

Why is Retrieval-Augmented Generation important?

Retrieval-Augmented Generation (RAG) is important because it addresses several challenges faced by Large Language Models (LLMs), which are used in AI for chatbots and natural language processing:

Reducing False Information: Sometimes, LLMs answer questions with false information, especially when they don't know the answer. RAG helps by guiding the LLM to find and use reliable information.

Keeping Information Current: LLMs have a knowledge cutoff, meaning they can't provide information newer than their training data. RAG connects the LLM to up-to-date sources, so responses can reflect current information.

Using Trustworthy Sources: Without RAG, LLMs may draw on unreliable sources in their training data. RAG grounds the LLM in authoritative, trustworthy sources that you choose, improving the accuracy of its responses.

Clarifying Terminology Confusion: LLMs can get confused when the same words mean different things in different contexts. RAG helps by retrieving information from sources that use terminology accurately and consistently.

Think of an LLM like a keen new employee who's eager to answer every question confidently but isn't always well-informed. This can harm user trust. RAG helps this "employee" to stay informed by guiding them to reliable, current information, thereby improving trust and accuracy in chatbot responses.

Limitations of traditional RAG

Traditional Retrieval-Augmented Generation (RAG) systems have revolutionized AI by combining Large Language Models (LLMs) with vector databases to overcome the limitations of off-the-shelf LLMs. However, these systems struggle with multi-step tasks and are poorly suited to complex use cases. The traditional approach works well for simple Q&A chatbots and support bots, but it tends to break down as soon as queries become more complex, for example when an answer requires combining several sources or a sequence of lookups. These systems also often struggle to contextualize retrieved data, leading to superficial responses that miss the nuances of a query.

Introducing Agentic RAG

Agentic RAG emerges as an evolution of traditional RAG, integrating AI agents into the RAG approach. These autonomous agents analyze initial findings and strategically select effective tools for data retrieval. They can break a complex task down into several subtasks so it becomes easier to handle. They also maintain memory (such as chat history), so they know what has already happened and which steps still need to be taken.

These agents can also call external APIs or tools when a task requires them, reasoning about the problem and acting on the results. This is what makes the agentic RAG approach so powerful: the system deconstructs complex queries into manageable segments and assigns specific agents to each part while coordinating their work.
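The decompose, act, and remember cycle can be sketched in a few lines. This is a hypothetical illustration: `plan` is a hard-coded stand-in for an LLM planner, the two tools and their revenue figures are made up, and `memory` plays the role of the chat history that records completed steps. Arguments written as `"$n"` refer to the result of step `n`.

```python
from typing import Callable


# Hypothetical tools; real agents would wrap search indexes, CRMs, or other APIs.
def lookup_revenue(region: str) -> int:
    figures = {"emea": 1200, "apac": 800}  # made-up figures in $K
    return figures[region]


def add(a: int, b: int) -> int:
    return a + b


TOOLS: dict[str, Callable[..., int]] = {"lookup_revenue": lookup_revenue, "add": add}


def plan(task: str) -> list[tuple[str, tuple]]:
    """Stand-in for an LLM planner that breaks a task into ordered tool calls."""
    if task == "total revenue for emea and apac":
        return [
            ("lookup_revenue", ("emea",)),
            ("lookup_revenue", ("apac",)),
            ("add", ("$0", "$1")),
        ]
    raise ValueError(f"no plan for task: {task!r}")


def resolve(arg, memory: list[dict]):
    """Replace a "$n" reference with the stored result of step n."""
    if isinstance(arg, str) and arg.startswith("$"):
        return memory[int(arg[1:])]["result"]
    return arg


def run_agent(task: str) -> tuple[int, list[dict]]:
    memory: list[dict] = []  # record of completed steps, like chat history
    for tool_name, raw_args in plan(task):
        args = [resolve(a, memory) for a in raw_args]
        result = TOOLS[tool_name](*args)
        memory.append({"tool": tool_name, "args": args, "result": result})
    return memory[-1]["result"], memory
```

`run_agent("total revenue for emea and apac")` runs two lookups and then an addition that reads both earlier results from memory. In a real agent, an LLM would produce the plan and could revise it after seeing each intermediate result.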

Key benefits and use cases of Agentic RAG

Agentic RAG offers numerous advantages over traditional systems. Its autonomous agents work independently, allowing for efficient handling of complex queries in parallel. The system's adaptability enables dynamic adjustment of strategies based on new information or evolving user needs. In marketing, Agentic RAG can analyze customer data to generate personalized communications and provide real-time competitive intelligence. It also enhances decision-making in campaign management and improves search engine optimization strategies.
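Because sub-agents work independently, their calls can overlap in time. Here is a minimal sketch using Python's standard `concurrent.futures.ThreadPoolExecutor`. `sub_agent` is a hypothetical placeholder whose `time.sleep` simulates a slow LLM or API call; threads fit here because that kind of work is I/O-bound.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def sub_agent(subquery: str) -> str:
    """Hypothetical specialist agent; the sleep stands in for a slow LLM or API call."""
    time.sleep(0.1)
    return f"findings for: {subquery}"


def answer_in_parallel(subqueries: list[str]) -> list[str]:
    """Run independent sub-agents concurrently and collect their results.
    pool.map yields results in input order, so outputs line up with subqueries."""
    with ThreadPoolExecutor(max_workers=max(1, len(subqueries))) as pool:
        return list(pool.map(sub_agent, subqueries))
```

For a marketing query split into, say, customer segments, competitor pricing, and campaign performance, the three sub-agents finish in roughly the time of one call rather than three.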

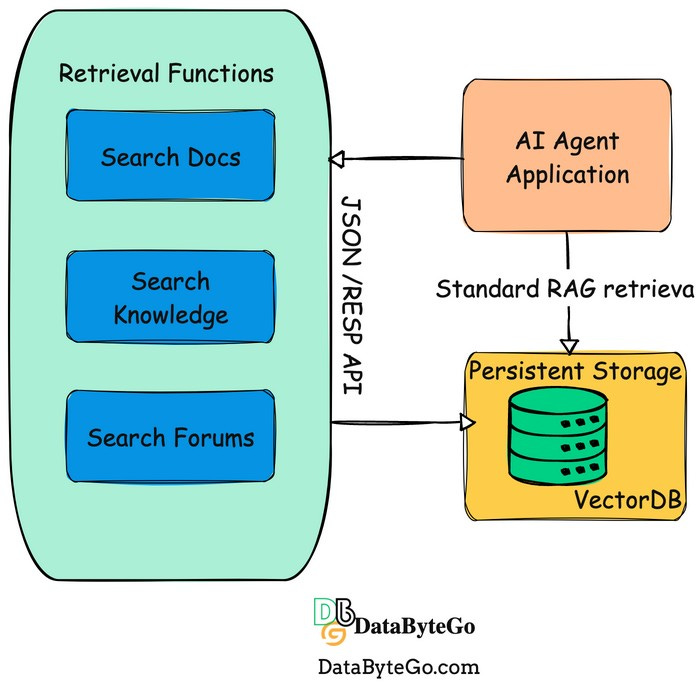

The key to agentic RAG systems: retrieval functions

Getting down to brass tacks, the key design difference between building traditional RAG systems and agentic RAG systems is the idea of retrieval functions. You still need RAG pipelines to populate your vector databases. But as you’re building your search indexes, you need to think ahead to the functions that will create an interface to your search indexes. These functions need to be designed to anticipate the actions your AI agent will need to take to solve the problems you want it to solve.
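As a hedged illustration of designing retrieval functions ahead of time, the sketch below exposes two purpose-built functions instead of one raw passthrough to the index. The function names, descriptions, and the `{"name": ..., "arguments": {...}}` call shape are assumptions for this example. The parameter schemas follow JSON Schema conventions, but the exact wrapper format varies between LLM providers' function-calling APIs.

```python
import json


# Hypothetical purpose-built retrieval functions; each maps to a question
# the agent is expected to ask, rather than exposing the raw index.
def search_product_docs(query: str) -> list[str]:
    return [f"[docs] result for {query!r}"]  # placeholder for a filtered vector search


def search_community_forum(query: str, solved_only: bool = True) -> list[str]:
    tag = "solved" if solved_only else "any"
    return [f"[forum:{tag}] result for {query!r}"]  # placeholder for a filtered vector search


# Descriptions the LLM sees when deciding which function to call.
TOOL_SPECS = [
    {
        "name": "search_product_docs",
        "description": "Search official product documentation for how-to and configuration questions.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
    {
        "name": "search_community_forum",
        "description": "Search community forum threads for troubleshooting reports from other users.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string"},
                "solved_only": {"type": "boolean"},
            },
            "required": ["query"],
        },
    },
]

FUNCTIONS = {
    "search_product_docs": search_product_docs,
    "search_community_forum": search_community_forum,
}


def dispatch(tool_call_json: str) -> list[str]:
    """Execute a tool call emitted by the LLM as {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    return FUNCTIONS[call["name"]](**call["arguments"])
```

Each description tells the model when a function applies, which narrows the decisions it has to make compared with choosing arbitrary metadata filters on a single search function.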

Designing functions to optimize the retrieval process

In one of the first agentic RAG implementations I tried, I created a function that was a passthrough to the vector database I was using.

I loaded all three of my unstructured data sources into a single vector index and populated metadata to let me retrieve chunks from multiple documents within each system. For example, setting the metadata filter source=docs allowed me to limit my search to context from the product documentation.
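The single-index setup described above can be approximated as follows. Every chunk carries a `source` metadata field, and a filter such as `source=docs` restricts the search to product documentation. This is a hypothetical stand-in for a vector database's metadata filtering: the sample chunks are made up, and keyword overlap replaces vector similarity to keep it self-contained.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


# One shared index holding chunks from three hypothetical sources.
INDEX = [
    Chunk("Configure SSO under Settings > Security.", {"source": "docs"}),
    Chunk("SSO broke after upgrading; clearing the cache fixed it.", {"source": "forum"}),
    Chunk("Ticket 123: customer cannot enable SSO.", {"source": "tickets"}),
]


def tokens(text: str) -> set:
    """Lower-case word set used for a crude relevance check."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, filters: Optional[dict] = None) -> list:
    """Return chunks that share a word with the query and match every metadata filter."""
    filters = filters or {}
    q = tokens(query)
    return [
        chunk.text
        for chunk in INDEX
        if all(chunk.metadata.get(key) == value for key, value in filters.items())
        and q & tokens(chunk.text)
    ]
```

Note that `search("sso", {"source": "community"})` returns an empty list rather than an error. That is the kind of silent failure a model can trigger when it is allowed to set raw filters.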

My initial findings with this approach were quite poor. The function calls I got from the LLM with the passthrough approach often made no sense at all. It would set metadata filters to search for documentation in the community forums. It would use various metadata fields in odd combinations that didn’t work well.

As you can imagine, when the retrieved data is bad, the generation process is going to be poor as well.
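The text does not say how this was ultimately fixed. One common mitigation, shown here purely as an assumption, is to validate the filters the LLM proposes before running the search and return any problems to the model so it can correct its call. `ALLOWED_FILTERS` and `check_filters` are hypothetical names.

```python
# Hypothetical list of the metadata filters the index actually supports.
ALLOWED_FILTERS = {"source": {"docs", "forum", "tickets"}}


def check_filters(filters: dict) -> list:
    """Return human-readable problems with LLM-proposed filters; empty means valid."""
    errors = []
    for key, value in filters.items():
        if key not in ALLOWED_FILTERS:
            errors.append(f"unknown filter field {key!r}; allowed: {sorted(ALLOWED_FILTERS)}")
        elif not isinstance(value, str) or value not in ALLOWED_FILTERS[key]:
            errors.append(
                f"invalid value {value!r} for {key!r}; allowed: {sorted(ALLOWED_FILTERS[key])}"
            )
    return errors
```

In an agent loop, a non-empty list would be returned to the model as the tool result (for example, `invalid value 'community' for 'source'; allowed: ['docs', 'forum', 'tickets']`) so it can retry with a valid filter instead of retrieving poor context.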

Source: NVIDIA

Fueling Agentic AI With Enterprise Data

Across industries and job functions, generative AI is transforming organizations by turning vast amounts of data into actionable knowledge, helping employees work more efficiently.

AI agents build on this potential by accessing diverse data through accelerated AI query engines, which process, store and retrieve information to enhance generative AI models. A key technique for achieving this is RAG, which allows AI to tap into a broader range of data sources.

Over time, AI agents learn and improve by creating a data flywheel, where data generated through interactions is fed back into the system, refining models and increasing their effectiveness.
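A data flywheel starts with capturing interactions and feedback. The sketch below is a minimal, hypothetical version: it logs each query, answer, and user rating, then separates well-rated examples (candidates for evaluation sets or model refinement) from poorly rated ones that need human review. The 1 to 5 rating scale and the thresholds are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Interaction:
    query: str
    answer: str
    rating: int  # hypothetical 1-5 user feedback score


@dataclass
class Flywheel:
    log: list = field(default_factory=list)

    def record(self, query: str, answer: str, rating: int) -> None:
        """Capture every interaction together with its feedback."""
        self.log.append(Interaction(query, answer, rating))

    def good_examples(self, min_rating: int = 4) -> list:
        """Well-rated pairs: candidates for evaluation sets or fine-tuning data."""
        return [(i.query, i.answer) for i in self.log if i.rating >= min_rating]

    def needs_review(self, max_rating: int = 2) -> list:
        """Poorly rated pairs: candidates for fixing retrieval or prompts."""
        return [(i.query, i.answer) for i in self.log if i.rating <= max_rating]
```

Feeding `good_examples()` back into evaluation or training data, and fixing the causes behind `needs_review()`, is what closes the loop.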

Closing Thoughts: Making Work Easier with Agentic RAG

As we move deeper into the world of AI, Agentic Retrieval-Augmented Generation (RAG) systems are changing the way businesses work. They’re not just making things faster—they’re helping industries like finance, healthcare, and legal work smarter and more accurately.

Agentic RAG is a big step up from older systems. It's not just about answering simple questions anymore. These systems can now handle tough problems on their own by breaking them into smaller parts and using real-time data to make smart decisions. And as they learn from feedback, they get better at handling new challenges.

But, like any new technology, to really get the most out of Agentic RAG, businesses need to put the right tools in place. They also need to remember that AI is there to help humans, not replace them. It’s also important to make sure the AI works in a way that’s safe and fair.

The future of work is going to be full of AI agents. They will help businesses move faster, make better decisions, and stay ahead of the competition. If companies use Agentic RAG the right way, they’ll be able to do things better, smarter, and faster. It’s an exciting time for both businesses and the people who work in them.
